Hello guys,

I'm trying to adjust a detail of a Kinect-driven animation, but I'm not getting the expected result. I'll try to explain; apologies in advance, my native language is Portuguese.

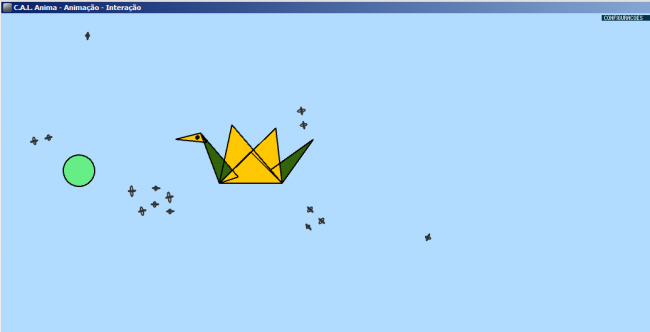

The screenshot shows the animation (based on examples from OpenProcessing; I have not included the links), to which I am making some adjustments.

It works like this:

The bird follows the mouse coordinates. When the Kinect is enabled, the mouse movement is replaced by the Kinect coordinates through the Robot class.
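To make the Robot part concrete, here is a minimal sketch of the idea, assuming `java.awt.Robot` is the class in question. The class `CursorMover` and its methods are hypothetical names used only for illustration; they are not taken from the project.

```java
import java.awt.AWTException;
import java.awt.Robot;

// Hypothetical helper, not part of the posted sketch.
final class CursorMover {

    // Keep a coordinate inside the inclusive range [lo, hi].
    static int clamp(int value, int lo, int hi) {
        return Math.max(lo, Math.min(hi, value));
    }

    // Create the Robot once (for example in setup()); it needs a real display.
    static Robot createRobot() throws AWTException {
        return new Robot();
    }

    // Move the system cursor to (x, y), clamped to the screen bounds.
    static void moveTo(Robot robot, int x, int y, int screenW, int screenH) {
        robot.mouseMove(clamp(x, 0, screenW - 1), clamp(y, 0, screenH - 1));
    }
}
```

In a sketch this would be called once per frame, for example `CursorMover.moveTo(robot, pX, pY, w, h)`. Note that `Robot.mouseMove` works in screen coordinates, not in sketch-window coordinates, so the values only line up directly when the sketch covers the whole screen.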

When the Kinect captures the image from a distance, everything works: the Kinect captures the coordinates, and they are rescaled so the bird can move across the whole screen.
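The rescaling step can be sketched in plain Java as follows. This is only an illustration: the class `KinectToScreen` and the method `toScreen` are hypothetical, and `map` here is a re-implementation of the linear formula behind Processing's `map()`, not the Processing function itself.

```java
// Hypothetical helper that mirrors the rescaling step in plain Java.
final class KinectToScreen {

    // Same linear interpolation that Processing's map() performs.
    static float map(float value, float inLo, float inHi, float outLo, float outHi) {
        return outLo + (outHi - outLo) * ((value - inLo) / (inHi - inLo));
    }

    // Convert a point from Kinect depth-image space to screen space.
    static int[] toScreen(int kinectX, int kinectY,
                          int kinectW, int kinectH,
                          int screenW, int screenH) {
        int screenX = (int) map(kinectX, 0, kinectW, 0, screenW);
        int screenY = (int) map(kinectY, 0, kinectH, 0, screenH);
        return new int[] { screenX, screenY };
    }
}
```

For example, the centre of a 640x480 depth image, `toScreen(320, 240, 640, 480, 1280, 960)`, lands on the centre of a 1280x960 screen. Only the final point is rescaled; the depth data itself stays at the Kinect's resolution.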

My problem is that I need to track the user's hand while they are seated, because the goal of the project is to benefit people with limited mobility, for example wheelchair users.

The Kinect seems like a good fit for this, but I ran into one detail: the model I was using only captures the coordinates when the user is standing.

But I found a way around this in other code (I can post the links if necessary).

My question is this: when I increase the screen size beyond the resolution of the Kinect, an error occurs. From what I can tell, the loop cannot cover an area larger than the Kinect's image.
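To show what I mean by the loop and the Kinect's area, here is a minimal standalone sketch of a closest-point search whose bounds come from the depth image and never from the screen. It assumes the error is an out-of-range array index, which is only a guess since I have not included the error message. The class `ClosestPoint` and the method `find` are hypothetical names.

```java
// Hypothetical helper; the names are not from the posted sketch.
final class ClosestPoint {

    // Returns {x, y, depth} of the nearest valid pixel,
    // or {-1, -1, maxDepth} when no pixel qualifies.
    static int[] find(int[] depth, int depthW, int depthH, int maxDepth) {
        int bestX = -1;
        int bestY = -1;
        int best = maxDepth;
        if (depthW <= 0) {
            return new int[] { bestX, bestY, best };
        }
        // Never read past the array, even if depthH is too large.
        int rows = Math.min(depthH, depth.length / depthW);
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < depthW; x++) {
                int current = depth[x + y * depthW];
                // A value of 0 means "no reading" and is skipped.
                if (current > 0 && current < best) {
                    best = current;
                    bestX = x;
                    bestY = y;
                }
            }
        }
        return new int[] { bestX, bestY, best };
    }
}
```

With the values from the posted code this would be `ClosestPoint.find(depthValues, 640, 480, 8000)`. The screen size `w` and `h` would then only appear afterwards, when the resulting x and y are rescaled.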

Below is the sample code ...

I'm not sure I've explained the idea clearly; in any case, thank you for your attention.

Thank you,

Code excerpt:

void atualizaKinect(){

kinect.update(); // update the camera

if (rastreamentoIMG){

// let's be honest. This is kind of janky. We're just setting a huge(ish) value for the initial value of closestValue.

// This totally works but it's not very elegant IMO.

closestValue = 8000;

// this initializes and places the values from the kinect depth

// image into our single dimensional array.

int [] depthValues = kinect.depthMap();

/* Disabled attempt: this rescaled the depth readings themselves, not the x/y coordinates.

for (int j = 0; j < depthValues.length; j++){

depthValues[j]= int(map(depthValues[j], 0, w, 0, 640));

}*/

// this breaks our array down into rows

for(int y = 0; y < 480; y++ ){

// this breaks our array down into specific pixels in each row

for(int x = 0; x < 640; x++){

// this pulls out the specific array position

int i = x + y * 640;

int current = depthValues[i];

//now we're on to comparing them!

if ( current > 0 && current < closestValue){

closestValue = current;

closestX = x;

closestY = y;

}

}

}

// draw the depth image on the screen, once per frame, after both loops have finished

image(kinect.depthImage(), 0, 0);

//if (desenhaEsqueleto){

// draw that swanky red circle identifying it

fill(255, 0, 0); //This sets the colour to red

ellipse(closestX, closestY, 25, 25);

// Rescale pX and pY from the point captured by the Kinect to the screen size

pX = int(map(closestX, 0, 640, 0, w));

pY = int(map(closestY, 0, 480, 0, h));

//}

} else {

kinectDepth = kinect.depthImage(); // get Kinect data

userID = kinect.getUsers(); // get all user IDs of tracked users

// loop through each user to see if tracking

for(int i=0;i<userID.length;i++){

// if Kinect is tracking certain user then get joint vectors

if(kinect.isTrackingSkeleton(userID[i])){

// get confidence level that Kinect is tracking head

confidence = kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_HEAD, confidenceVector);

// if confidence of tracking is beyond threshold, then track user -- compares the captured value ("calibration")

if(confidence > confidenceLevel){

// get 3D position of head/hand/foot

kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_HEAD, headPosition);

kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_RIGHT_HAND, handPositionRight);

kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_LEFT_HAND, handPositionLeft);

kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_RIGHT_FOOT, footPositionRight);

kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_LEFT_FOOT, footPositionLeft);

// convert real world point to projective space

kinect.convertRealWorldToProjective(headPosition,headPosition);

kinect.convertRealWorldToProjective(handPositionRight, handPositionRight);

kinect.convertRealWorldToProjective(handPositionLeft, handPositionLeft);

kinect.convertRealWorldToProjective(footPositionRight, footPositionRight);

kinect.convertRealWorldToProjective(footPositionLeft, footPositionLeft);

// create a distance scalar related to the depth in z dimension - get 3D position of hand

distanceScalarHead = 525/headPosition.z;

distanceScalarHandRight = 525/handPositionRight.z;

distanceScalarHandLeft = 525/handPositionLeft.z;

distanceScalarFootRight = 525/footPositionRight.z;

distanceScalarFootLeft = 525/footPositionLeft.z;

// Update the global variables pX and pY to replace the mouse movement with the Kinect

//pX = int(handPositionRight.x);

//pY = int(handPositionRight.y);

// Rescale pX and pY from the point captured by the Kinect to the screen size

pX = int(map(int(handPositionRight.x), 0, 640, 0, w));

pY = int(map(int(handPositionRight.y), 0, 480, 0, h));

distanciaHandRight = dist(posX, posY, handPositionRight.x, handPositionRight.y);

distanciaHead = dist(posX, posY, headPosition.x, headPosition.y);

stroke(userColor[(i)]); //Change draw color based on hand id#

fill(userColor[(i)]); //Fill the ellipse with the same color

//println("pX/pY - 1 : " + pX + " - " + pY);

if (desenhaEsqueleto) drawSkeleton(userID[i]); //Draw the rest of the body

}

}

}

}

}