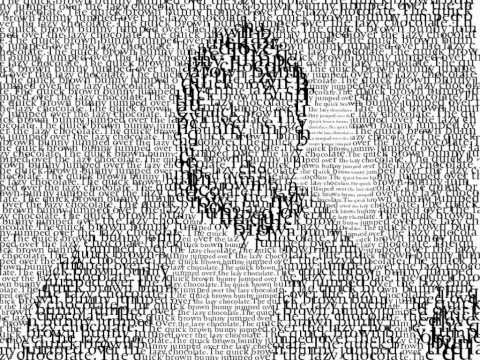

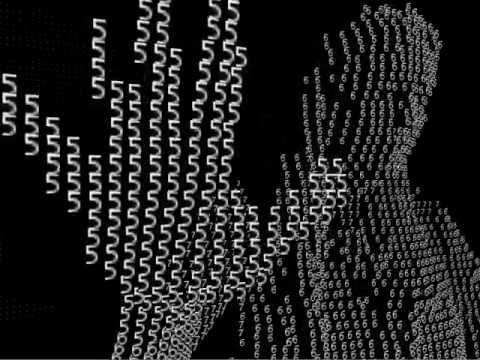

Hello, I'm new to programming with Processing and I'm trying to write a sketch for my art bachelor's degree. What I want to make is an interactive screen filled with letters (text), with a live camera recording what's happening in front of it and detecting motion. When there is motion, a letter that meets the motion line (the contour of a human body) swaps places with the neighboring letter on the side the motion came from.
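The swap rule described above can be sketched in plain Java, separately from the camera code. This is only a minimal sketch under assumptions that are not part of the original sketch: the letters are stored in a `char` grid, the motion image has already been reduced to one `boolean` per grid cell, and the direction of movement is estimated from how the horizontal centre of the moving cells shifts between frames. The names `LetterSwap`, `centroidX`, `directionFrom` and `swapOnMotion` are hypothetical.

```java
// Sketch of "swap with the neighbour on the side the motion came from".
// Assumptions (not from the original sketch): letters sit in a char grid, motion is already
// reduced to one boolean per grid cell, and direction comes from the motion centroid's shift.
class LetterSwap {

    // Average column index of all cells with motion, or NaN when nothing moved.
    static double centroidX(boolean[][] motion) {
        double sum = 0;
        int count = 0;
        for (boolean[] row : motion) {
            for (int c = 0; c < row.length; c++) {
                if (row[c]) {
                    sum += c;
                    count++;
                }
            }
        }
        return count == 0 ? Double.NaN : sum / count;
    }

    // Hypothetical rule: if the centroid moved right, the motion came from the left (-1);
    // otherwise (including NaN) it is treated as coming from the right (+1).
    static int directionFrom(double prevCentroidX, double currCentroidX) {
        return currCentroidX > prevCentroidX ? -1 : 1;
    }

    // Swaps every letter hit by motion with its neighbour at column (c + fromDx).
    // Each letter takes part in at most one swap per frame, so letters do not slide across the row.
    static void swapOnMotion(char[][] grid, boolean[][] motion, int fromDx) {
        for (int r = 0; r < grid.length; r++) {
            boolean[] used = new boolean[grid[r].length];
            for (int c = 0; c < grid[r].length; c++) {
                int n = c + fromDx; // neighbour on the side the motion came from
                if (!motion[r][c] || n < 0 || n >= grid[r].length || used[c] || used[n]) {
                    continue;
                }
                char tmp = grid[r][c];
                grid[r][c] = grid[r][n];
                grid[r][n] = tmp;
                used[c] = true;
                used[n] = true;
            }
        }
    }

    public static void main(String[] args) {
        char[][] grid = { "abcd".toCharArray() };
        boolean[][] motion = { { false, false, true, false } };
        // The motion centroid moved from column 1 to column 2, i.e. the body entered from the left.
        int fromDx = directionFrom(1.0, centroidX(motion));
        swapOnMotion(grid, motion, fromDx);
        System.out.println(new String(grid[0])); // prints "acbd"
    }
}
```

The `used` flags are a design choice: without them, a letter swapped to the right could be hit again in the same pass and be carried across the whole row in a single frame.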

As a base for that, I used the raindrops sketch and Shiffman's Example 16-13: Simple motion detection.
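Shiffman's example detects motion by frame differencing: each pixel's color in the current frame is compared with the same pixel in the previous frame, and the pixel counts as motion when the distance in RGB space is above a threshold. A minimal plain-Java sketch of that comparison, assuming frames are stored as packed ARGB `int` arrays like Processing's `pixels[]` (the names `FrameDiff`, `colorDistance` and `motionMask` are hypothetical):

```java
import java.util.Arrays;

// Minimal frame-differencing sketch in plain Java (no Processing library).
// Assumption: frames are packed ARGB ints, the same layout as Processing's pixels[] array.
class FrameDiff {

    // Euclidean distance between two colours in RGB space, like dist(r1, g1, b1, r2, g2, b2).
    static double colorDistance(int c1, int c2) {
        int dr = ((c1 >> 16) & 0xFF) - ((c2 >> 16) & 0xFF);
        int dg = ((c1 >> 8) & 0xFF) - ((c2 >> 8) & 0xFF);
        int db = (c1 & 0xFF) - (c2 & 0xFF);
        return Math.sqrt(dr * dr + dg * dg + db * db);
    }

    // Returns a mask where true marks a pixel whose colour changed by more than threshold.
    static boolean[] motionMask(int[] current, int[] previous, double threshold) {
        if (current.length != previous.length) {
            throw new IllegalArgumentException("frames must have the same size");
        }
        boolean[] mask = new boolean[current.length];
        for (int loc = 0; loc < current.length; loc++) {
            mask[loc] = colorDistance(current[loc], previous[loc]) > threshold;
        }
        return mask;
    }

    public static void main(String[] args) {
        int w = 3;
        int h = 2;
        int[] previous = new int[w * h];
        int[] current = new int[w * h];
        Arrays.fill(previous, 0xFF000000); // opaque black
        Arrays.fill(current, 0xFF000000);
        current[1 + 1 * w] = 0xFFFFFFFF;   // pixel (x=1, y=1) turns white
        boolean[] mask = motionMask(current, previous, 50);
        for (int y = 0; y < h; y++) {
            StringBuilder row = new StringBuilder();
            for (int x = 0; x < w; x++) {
                row.append(mask[x + y * w] ? '#' : '.'); // same 1D index formula: x + y * width
            }
            System.out.println(row); // prints "..." then ".#."
        }
    }
}
```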

But now I'm getting a gray screen, and letters appear non-stop. Did I mess something up with the arrays? Should the text not be stored in a String? I've read about Kinect, but I'm not sure it would help at this point. I hope to get some hints about what to do next :]

```
import processing.video.*;
import java.awt.Frame;
import java.awt.Image;
import java.text.*;

Capture cam;
Letter[][] drops;
int dropsLength;
PImage prevFrame;

int sWidth = 1280;
int sHeight = 720;

String inputString = "Įsivaizduokime pasaulį, kur visi viską žino tiksliai, ir niekada neklysta. Niekam nekiltų abejoių, koks bus rytoj oras, kaip išsaugoti tirpstačius ledynus, ar koks visatos dydis. Žvelgiant į krentantį kamuoliuką kiekvienas galėtų pasakyti: - O šito kamuoliuko kritimo greitis 6,325 m/s. - Tikrai taip - atsakytų kitas. Ir viskas, daugiau nebebūtų jokių diskusijų, ieškojimų, matavimų. Su absoliučiu žinojimu gyvenimas taptų nebeįdomus, monotoniškas, tokiu atveju net progresas neįmanomas. Kai pradedu taip galvoti, džiaugiuosi nežinojimu, diskusijų galimybe, tiesos ieškojimu. Klaida suvokiama kaip neišvengiamas procesas teisybės ieškojime leidžia drąsiai žengti į praktikos sritį, nebijoti suklysti, o neteisingus procesus paversti progresu, žingsneliu link tikslo. Menininkas nebėra tas genijus, kuris turi sukurti kažką naujo, tarsi nežemiško, keičiančio visą mūsų suvokimą. Jo praktikos esmė eksperimentuoti ir klysti, organizuoti jau esamomis reikšmėmis ir kurti nau";

char[] inputLetters;
int dupStrings = 3; // times to duplicate the text
int k = 800;
float threshold = 50;

void settings() {
  size(1280, 720);
}

void setup() {
  String[] cameras = Capture.list();

  drops = new Letter[dupStrings][inputString.length()]; //[inputString.length()];
  int wspace = 50;
  inputLetters = new char[inputString.length()];
  splitString();

  // first row height
  int addLineHeight = 30;

  for (int i = 0; i < dupStrings; i++) {
    for (int j = 0; j < inputLetters.length; j++) {
      if (inputLetters[j] < k + wspace) {
        Letter testLetter = new Letter(inputLetters[j]);
        testLetter.x = wspace;
        testLetter.y = addLineHeight;
        drops[i][j] = testLetter;
        wspace += 10; // space between letters

        // new row
        if (wspace >= sWidth) {
          wspace = 10;
          addLineHeight += 40; // space between rows
        }
      } else {
        addLineHeight += 50;
      }
    }
  }

  // camera connection
  if (cameras.length == 0) {
    println("There are no cameras available...");
    size(400, 400);
    exit();
  } else {
    cam = new Capture(this, sWidth, sHeight);
    cam.start();
    cam.loadPixels();
    prevFrame = createImage(cam.width, cam.height, RGB);
    size(sWidth, sHeight);
  }

  dropsLength = inputString.length();
}

void captureEvent(Capture cam) {
  // Before we read the new frame, we always save the previous frame for comparison
  // (needed for motion detection).
  prevFrame.copy(cam, 0, 0, cam.width, cam.height, 0, 0, cam.width, cam.height);
  prevFrame.updatePixels();
  // Read image from the camera
  cam.read();
}

void splitString() {
  for (int i = 0; i < inputString.length(); i++) {
    inputLetters[i] = inputString.charAt(i);
  }
}

void draw() {
  loadPixels();
  cam.loadPixels();
  prevFrame.loadPixels();

  // Begin loop to walk through every pixel
  for (int x = 0; x < cam.width; x++) {
    for (int y = 0; y < cam.height; y++) {
      int loc = x + y * cam.width;            // Step 1, what is the 1D pixel location
      color current = cam.pixels[loc];        // Step 2, what is the current color
      color previous = prevFrame.pixels[loc]; // Step 3, what is the previous color

      // Step 4, compare colors (previous vs. current)
      float r1 = red(current);
      float g1 = green(current);
      float b1 = blue(current);
      float r2 = red(previous);
      float g2 = green(previous);
      float b2 = blue(previous);
      float diff = dist(r1, g1, b1, r2, g2, b2);

      // Step 5, how different are the colors?
      // If the color at that pixel has changed, then there is motion at that pixel.
      if (diff > threshold) {
        // If motion, display black
        pixels[loc] = color(0);
      } else {
        // If not, display white
        pixels[loc] = color(255);
      }
    }
  }

  // Responding to the brightness/color of the screen
  for (int i = 0; i < dupStrings; i++) {
    for (int j = 0; j < dropsLength; j++) {
      if (drops[i][j].y < sHeight && drops[i][j].y > 0) {
        int loc = drops[i][j].x + ((drops[i][j].y) - 1) * sWidth;
        float bright = brightness(cam.pixels[loc]);
        if (bright > threshold) {
          drops[i][j].dropLetter();
          drops[i][j].upSpeed = 1;
        } else {
          if (drops[i][j].y > threshold) {
            int aboveLoc = loc = drops[i][j].x + ((drops[i][j].y) - 1) * sWidth;
            float aboveBright = brightness(cam.pixels[aboveLoc]);
            if (aboveBright < threshold) {
              drops[i][j].liftLetter();
              drops[i][j].upSpeed = drops[i][j].upSpeed * 5;
            }
          }
        }
      } else {
        drops[i][j].dropLetter();
      }
      drops[i][j].drawLetter();
      cam.updatePixels();
    }
  }
}

class Letter {
  int x;
  int y;
  int m;
  char textLetter;
  int upSpeed;
  int alpha = 150;

  Letter(char inputText) {
    x = 100;
    y = 100;
    textLetter = inputText;
    textSize(16);
    upSpeed = 1;
  }

  void drawLetter() {
    // if ( m < 1) {
    fill(150, 150, 150, alpha);
    text(textLetter, x, y);
  }

  void letterFade() {
    alpha -= 5;
    if (alpha <= 0) {
      y = int(random(-350, 0));
      alpha = 255;
    }
  }

  void dropLetter() {
    // y++;
    if (y > 730) {
      letterFade();
    }
  }

  void liftLetter() {
    int newY = y - upSpeed;
    if (newY >= 0) {
      y = newY;
    } else {
      y = 0;
    }
  }
}
```
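On the question of whether the text should be a String: a Java `String` can be turned into a `char[]` with `toCharArray()`, which does the same job as the `splitString()` loop above. The plain-Java sketch below roughly mirrors the row-wrapping loop in `setup()`, but it is only an illustration under its own assumptions: it collects letters in a `List` instead of the `Letter[][]` array, uses a simplified, hypothetical `Letter` class with final fields, and wraps back to the starting x rather than to 10, so it is not a drop-in replacement for the Processing code.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of turning the text into positioned letters with row wrapping.
// Assumptions: a List instead of a Letter[][] array, and a simplified Letter with final fields.
class LetterLayout {

    // Hypothetical minimal letter: one character and its position on screen.
    static final class Letter {
        final char ch;
        final int x;
        final int y;

        Letter(char ch, int x, int y) {
            this.ch = ch;
            this.x = x;
            this.y = y;
        }
    }

    // Places letters left to right; when x reaches maxWidth, wraps to startX on a new row.
    static List<Letter> layout(String text, int startX, int startY,
                               int letterSpacing, int lineHeight, int maxWidth) {
        List<Letter> letters = new ArrayList<>();
        int x = startX;
        int y = startY;
        for (char ch : text.toCharArray()) { // one call instead of a manual charAt() loop
            letters.add(new Letter(ch, x, y));
            x += letterSpacing;
            if (x >= maxWidth) {
                x = startX;
                y += lineHeight;
            }
        }
        return letters;
    }

    public static void main(String[] args) {
        // Repeating the text plays the role of dupStrings; every letter ends up in one flat list.
        StringBuilder text = new StringBuilder();
        for (int i = 0; i < 3; i++) {
            text.append("Įsivaizduokime ");
        }
        for (Letter l : layout(text.toString(), 50, 30, 10, 40, 1280)) {
            System.out.println(l.ch + " at (" + l.x + ", " + l.y + ")");
        }
    }
}
```

A flat list avoids having to keep a separate count like `dropsLength` in sync with the second dimension of a 2D array; whether that suits the rest of the sketch is a judgment call.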